Tweets

-

Replying to @lauriewired

Apparently scripting on mpv is quite powerful, so wondering if https://github.com/chachmu/mpvDLNA could be a solution. Asking for help on https://old.reddit.com/r/mpv/comments/1i11wb4/javascript_scripts_on_mpvandroid_from_fdroid/

(original)

-

Nature, the gift that keeps on giving.🌱

PS: more pesto coming! 🤩🫙 https://x.com/utopiah/status/1865014325083021769

(original)

-

Replying to @SadlyItsBradley

is this coherent with what you saw? Sorry if it’s obvious I just didn’t pay attention to updates for a little while but somehow yesterday the idea seemed obvious.

(original)

-

Replying to @utopiah

I’d definitely pay money for another Valve HMD (still enjoying the Index BTW, and can’t wait for Half-life: Alyx 2 ;) knowing that it helps unlock quality XR content outside of a gatekeeping store that is splintering XR.

(original)

-

Replying to @utopiah

So… can Valve repeat what they did with Proton and remove OS lock-in for an entire platform, namely Android, and have it working elsewhere, including on its own hardware but not limited to it?

(original)

-

Replying to @utopiah

Also AndroidXR is coming, with manufacturers announcing support.

That means… potentially supporting a lot of content that would normally not be available on Steam.

That also means if there is other hardware beyond the SteamDeck, namely XR hardware, it might repeat the pattern.

(original)

-

Replying to @utopiah

and the initial thought is, and to be honest probably the right one, to bring Android content on Linux too.

But… what OS does Quest run on? Android.

A lot of content for XR is on Steam, and works well, with and without Proton, but a LOT is NOT on Steam because of that.

(original)

-

Replying to @utopiah

For a bit more context, Valve has been working on Proton for a while. Beyond Wine, it makes running Windows executables, specifically games, work on Linux. That means not just the current version of Windows but even older ones.

They are now apparently working on Waydroid…

(original)

-

So… @valveSoftware is single-handedly making gaming on Windows a thing of the past. The irony being that gaming on Linux is now not just more stable or more performant but even runs MORE stuff thanks to compatibility layers.

But… what if the Android move was… also for XR?!

(original)

-

Looks like a gap.

(original)

-

Replying to @lauriewired

PS: years of using VLC pretty much daily, I just can’t remember it crashing. Not even once. I have used mpv for barely a day and… freeze.

I can’t deduce anything about security but the codebase as an argument seems flawed when my first hand experience is so strikingly different.🤷♂️

(original)

-

Replying to @andy_matuschak and @michael_nielsen

PS: hardware wise using reMarkable Pro daily and occasionally PineNote, no DRM content on either.

(original)

-

Replying to @andy_matuschak and @michael_nielsen

Same here, not a lawyer but IMHO a morally justified pragmatic take, especially in (sadly) times of oligopolies.

Also an occasion to share https://www.defectivebydesign.org

(original)

-

Replying to @lauriewired

Might try with https://github.com/varbhat/hyperupnp but it seems a bit convoluted. Hopefully there is no need to download the file locally and it can pass mpv the URL of the stream to open. Suggestions welcome.
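
A minimal sketch of the "pass mpv the URL" idea, assuming a desktop mpv binary on the PATH (mpv-android is launched differently, so this is only an illustration). The helper names `build_mpv_command` and `play`, and the example URL, are hypothetical and not part of hyperupnp or mpv.

```python
import shutil
import subprocess


def build_mpv_command(stream_url: str) -> list[str]:
    """Return the argument list asking mpv to open a network stream directly."""
    # Assumption: the media server exposes the file over plain http(s).
    if not stream_url.startswith(("http://", "https://")):
        raise ValueError("expected an http(s) stream URL")
    # mpv accepts a URL as a positional argument, so nothing is downloaded first.
    return ["mpv", stream_url]


def play(stream_url: str) -> None:
    """Launch mpv on the stream, failing early if mpv is not installed."""
    if shutil.which("mpv") is None:
        raise RuntimeError("mpv not found on PATH")
    subprocess.run(build_mpv_command(stream_url), check=True)


if __name__ == "__main__":
    # Hypothetical URL, as a DLNA/UPnP server might expose for one media item.
    print(build_mpv_command("http://192.168.1.10:8200/MediaItems/22.mp4"))
```

The browsing part (listing what the server offers) is what a tool like hyperupnp would have to provide; the sketch only covers the last step of handing the resulting URL to the player.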

(original)

-

Replying to @lauriewired

Seems not supported (https://github.com/mpv-android/mpv-android/issues/918) nor planned. Still curious about alternative browsing for non-local files.

(original)

-

Replying to @lauriewired

Not familiar with mpv but, beyond opening by URL, does it (at least the Android version) support DLNA, including browsing? If not, does it support browsing of any networked filesystem?

(original)

-

Replying to @utopiah

Just like slop the problem is shallower than we are led to believe. It’s not about an AI uprising, it’s “just” the usual race for money. The simple greed leading some to extract money regardless of the means.

Very boring, very “normal”, AI or not.

(original)

-

“[Los Angeles’] fire & police departments don’t invest in technology [sic] hopefully more people build AI robotics solutions for monitoring or help. Instead a lot of people in AI are building military solutions. Aka putting a gun on top of a robot dog,” https://www.404media.co/hollywood-sign-burning-ai-images-la-wildfire/

(original)

-

Replying to @utopiah

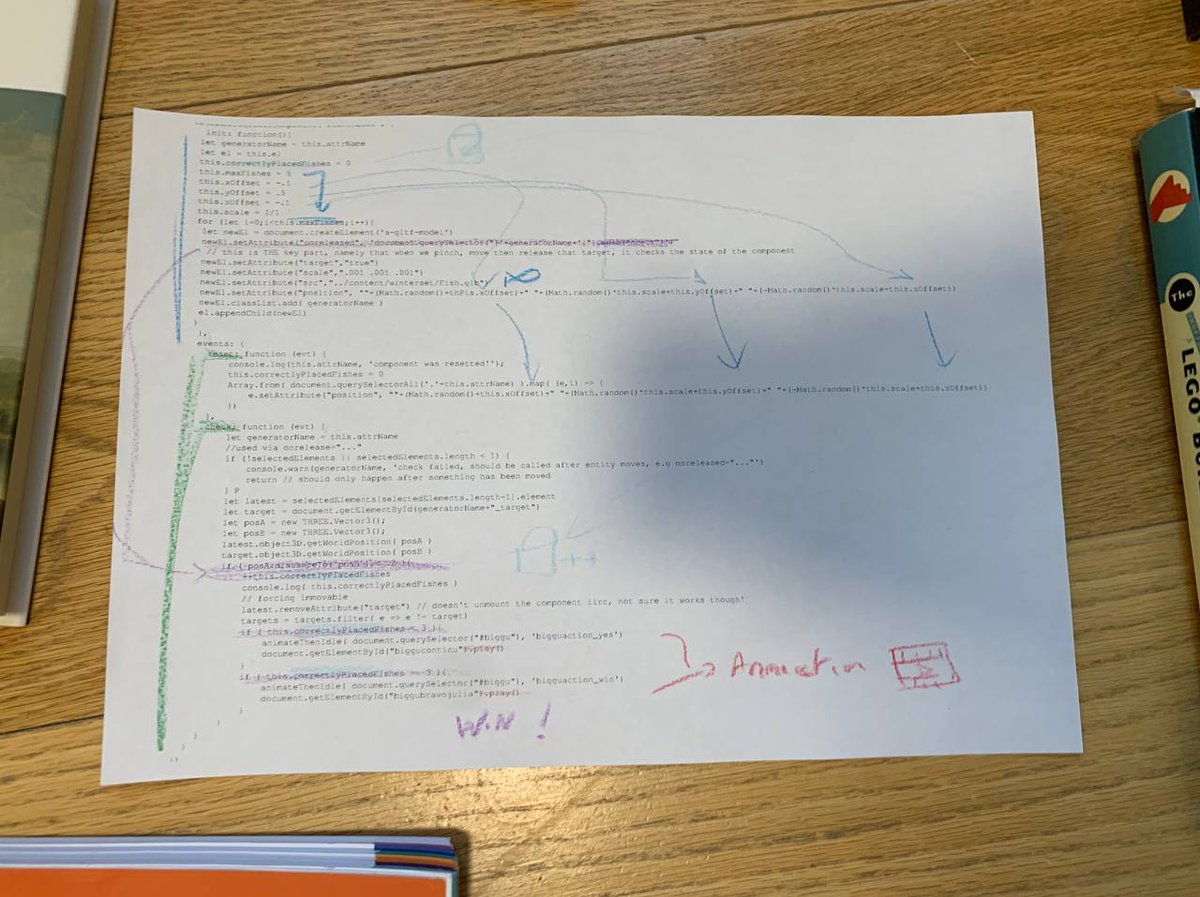

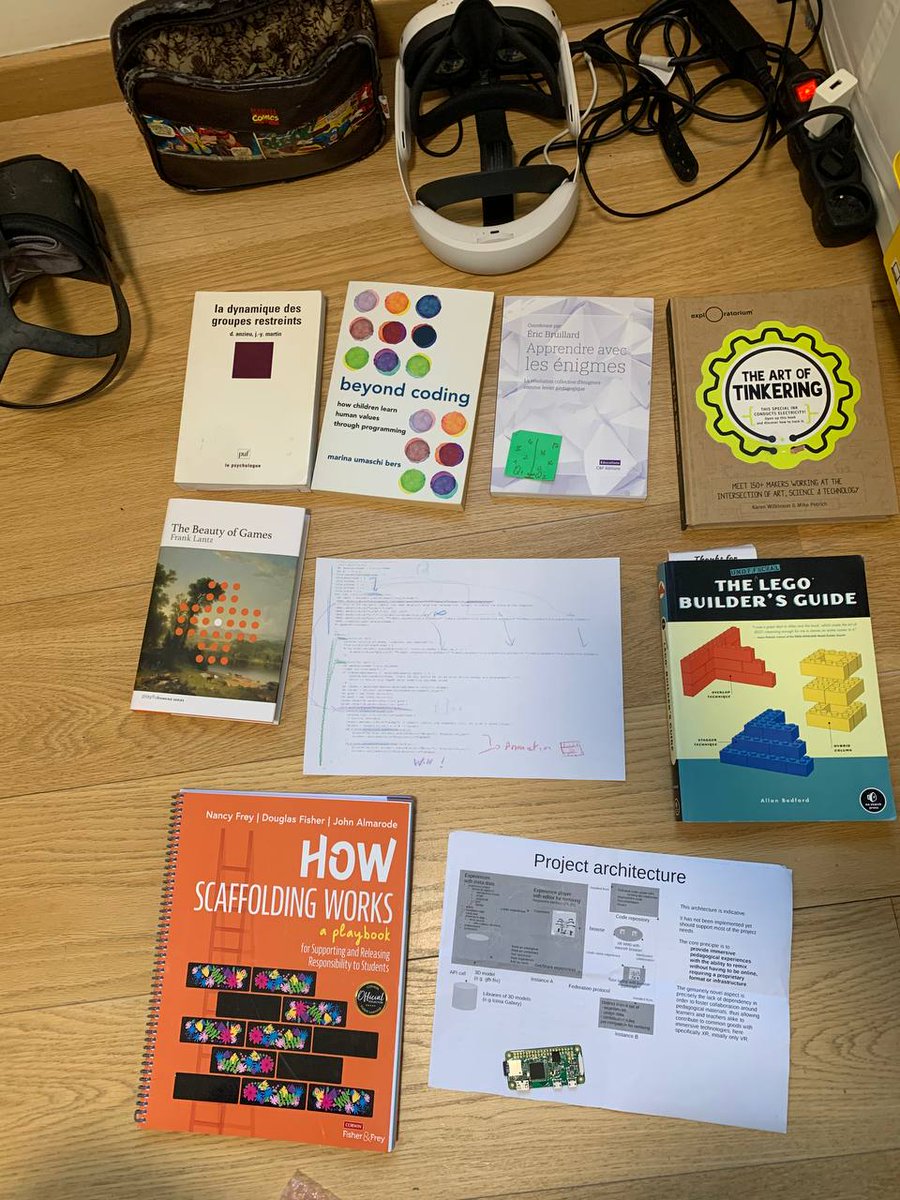

I’ll be explaining https://video.benetou.fr/w/4i2LaJCiZbtZE4MZEnRTLk with the hope of kids suggesting improvements or other mini games. I’ll try to do a video of the process in English to get more ideas from you all. Stay tuned.

(original)

-

Tomorrow leading a workshop on prototyping in XR for kids. The example will be based on a mini game for pedagogical purpose. Printed in and annotated with colors and sketches, let’s see how it goes!

(original)

-

RT @Boenau: This is the best video describing Car Brain I’ve seen.

(original)

-

Replying to @utopiah

Inspired by https://x.com/abacaj/status/1877387461032030285 which leads to interesting discussions, people arguing how truly all parts should be automated. As if… nobody writing software wants to do anything manually, it’s just in practice very hard to automate most things, hence trying first.

(original)

-

Designing an agent? Name it Mechanical Turk. Because… truly, it’s software design and user testing all the way down.🤷♂️

(original)

-

Replying to @Bhushanwtf

Thanks for the clarification, your decision makes sense!

(original)

-

RT @FrontYoungMinds: Ever made #decisions in a short time, or with a lot of uncertainty? Your brain probably didn’t use logical #reasoning…

(original)

-

Replying to @utopiah

IMHO and naively, it seems to respect https://fabien.benetou.fr/Analysis/AgainstPoorArtificialIntelligencePractices so I’d argue it’s the “good” kind of AI implementation.

(original)

-

Replying to @utopiah

PS: I donated to VLC a few times and I do use it daily. Great project. Does it need to ride the AI hype cycle? I’m not sure, but at least this seems potentially useful in the few cases where subtitles aren’t actually available (which is surprisingly rare, luckily).

(original)

-

“open source AI models” … and it’s STT again.

Cf https://x.com/utopiah/status/1876176247941931144 i.e. I really believe it’s either pointless LLM (where hallucinations are hidden under the rug) or GenAI (where attribution is ignored) or actually useful … but just “AI” as “boring” speech to text. 🤷♂️ https://x.com/videolan/status/1877072497146781946

(original)

-

Replying to @RafaTecXR

Hi! Will you add a WebXR mode?

(original)

-

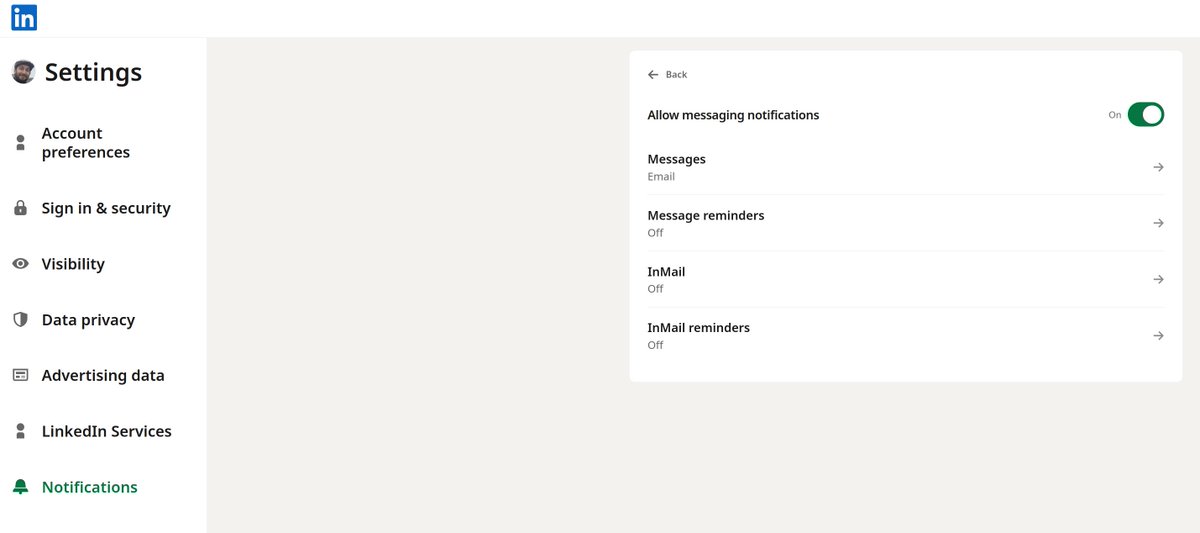

Seeing way WAY too much slop on LinkedIn. Generated or attention grabbing “content” that does not genuinely help me. So… I disabled ALL notifications except DM & switched to chronological feed w/o algorithmic suggestion.

Trying this for a month, let’s see!

🫸#enshittification

(original)

-

Replying to @utopiah

PS: inspired by “Won’t make a difference anyway as [higher ups as coders] had to chatgpt instead of listening to our suggestions” https://old.reddit.com/r/MachineLearning/comments/1hwbhuj/d_ml_engineers_whats_the_most_annoying_part_of/m60i75k/

(original)

-

Replying to @utopiah

s/quality/qualify/

(original)

-

Between XR and LLM, what is “reality” when we are COLLECTIVELY hallucinating?

Having a shared experience sustained over time is definitely not enough to quality as “real”.

(original)

-

Replying to @rcabanier

Neat, need to tinker with https://x.com/utopiah/status/1866459721797922961 again, especially with the open facial interface.

(original)

-

Replying to @utopiah

PS: the 3D printed model handle is https://www.printables.com/model/133055-warhammer-figure-painting-handle which I “flipped” around to hold this smaller piece.

(original)

-

Replying to @utopiah

That thing is so small I can’t get the focus right with the camera but… you get the idea. Was fun, will paint again.

(original)

-

Painted my 1st mini figurine!

I admit when I opened the starter set https://thearmypainter.com/products/warpaints-fanatic-starter-set by @theArmyPainter I was like “wow… that… thing is minuscule, no way I can paint that with my big fat fingers!” yet here I am. Nothing amazing but I did it :D

(original)

-

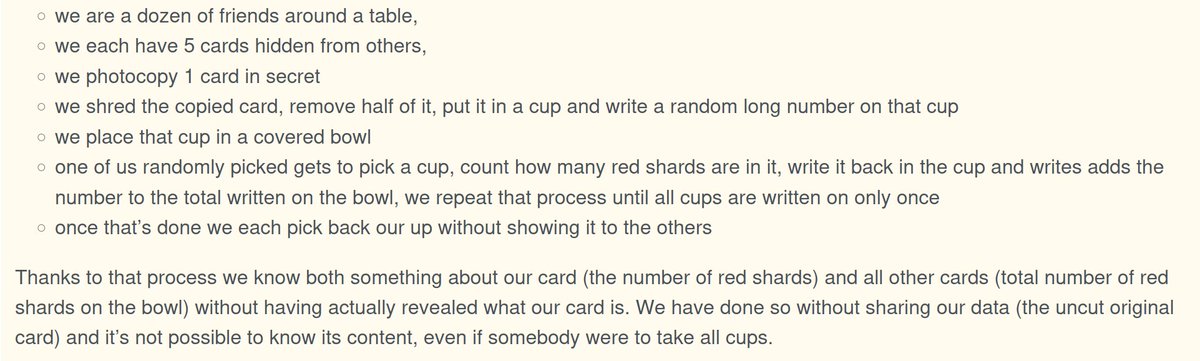

Trying to explain homomorphic encryption with an analogy, feedback appreciated :

https://lemmy.ml/post/24462544/15943262
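
To go with the analogy, a minimal sketch of what "computing on encrypted data" can look like, assuming a toy Paillier scheme with tiny primes. It is not secure and not necessarily the scheme discussed in the linked post; the function names are made up for this illustration.

```python
import math
import secrets


def keygen(p: int, q: int):
    """Toy Paillier key generation from two small primes (illustration only)."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    g = n + 1                      # common simple choice of generator
    mu = pow(lam, -1, n)           # modular inverse, valid because g = n + 1
    return (n, g), (lam, mu)


def encrypt(pub, m: int) -> int:
    """Encrypt integer m (0 <= m < n) with fresh randomness r."""
    n, g = pub
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n2) * pow(r, n, n2)) % n2


def decrypt(pub, priv, c: int) -> int:
    """Recover the plaintext from ciphertext c using the private key."""
    n, _ = pub
    lam, mu = priv
    x = pow(c, lam, n * n)
    return ((x - 1) // n * mu) % n


def add_encrypted(pub, c1: int, c2: int) -> int:
    """Multiply ciphertexts: the result decrypts to the SUM of the plaintexts."""
    n, _ = pub
    return (c1 * c2) % (n * n)


if __name__ == "__main__":
    pub, priv = keygen(61, 53)     # tiny primes, assumption for readability
    c1, c2 = encrypt(pub, 20), encrypt(pub, 22)
    total = add_encrypted(pub, c1, c2)   # done without ever seeing 20 or 22
    print(decrypt(pub, priv, total))
```

Whoever runs `add_encrypted` only needs the public key, which is the point: the party doing the computation never holds the plaintexts.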

(original)

-

Preview of a “full fullstack” prototyping presentation.

Showing here :

- welding (stool)

- wood working (cutting board)

- ceramic (coffee cup)

- PCB (light)

- software (Networked AFrame on RPi Zero)

- cardboard (even though not mine) for XR

Will add some 3D printing and more.

(original)

-

Replying to @Bhushanwtf

nodl vs rete

(original)

-

Replying to @Vrin_Librairie

cc @ya_lb

(original)

-

Replying to @Vrin_Librairie, @TropismesL and @filigranes365

Thanks a lot, if one of them replies, I’ll drop by! :D

(original)

-

@Vrin_Librairie Hello and happy new year! Do you have a reseller of your Cahiers Philosophiques in Brussels?

Best regards,

Fabien

(original)

-

Spam is back on X/Twitter. After a while of posting with little interaction, it’s back to “likes” from 0 followers/0 following accounts with porn links.

(original)

-

Replying to @utopiah

Sure, we probably went (made-up numbers) from 70%-ish accuracy, barely usable in lots of cases, to 90%-ish, nearly useful enough for most.

So that’s a real change.

It’s also not damn AI, AGI, even less ASI.

Useful progress, that isn’t free (tons of compute and R&D), though.

(original)

-

So funny (and sad) to listen to podcasts and hear hosts discuss how AI isn’t so hot… while still struggling to find actual use cases, it nearly always (check me) comes down to speech-to-text.🤔

Yeah, STT is genuinely useful!

We also had https://en.wikipedia.org/wiki/Dragon_NaturallySpeaking 27 years ago.

🤷♂️

(original)

-

In terms of business, implementing homomorphic encryption at scale for ML makes me wonder if self-hosted solutions, e.g. @ImmichApp for photos, could benefit from offering their ML processing https://github.com/immich-app/immich/tree/main/machine-learning as a paid service.🤔 https://x.com/lukOlejnik/status/1851580203966906762

(original)

-

RT @lukOlejnik: Apple is expanding its use of privacy-preserving technology, homomorphic encryption. This computing approach enables proces…

(original)

-

Replying to @Bhushanwtf

Better than https://retejs.org ?

(original)

-

Replying to @MVdWSoftware

Definitely, I’m there too, also Bluesky https://fabien.benetou.fr/Contact/Contact but I still do not want enshittification to progress in platforms I still rely on.

Thought it was a good occasion to show how to easily shape back UX at the individual level too.

(original)