Tweet

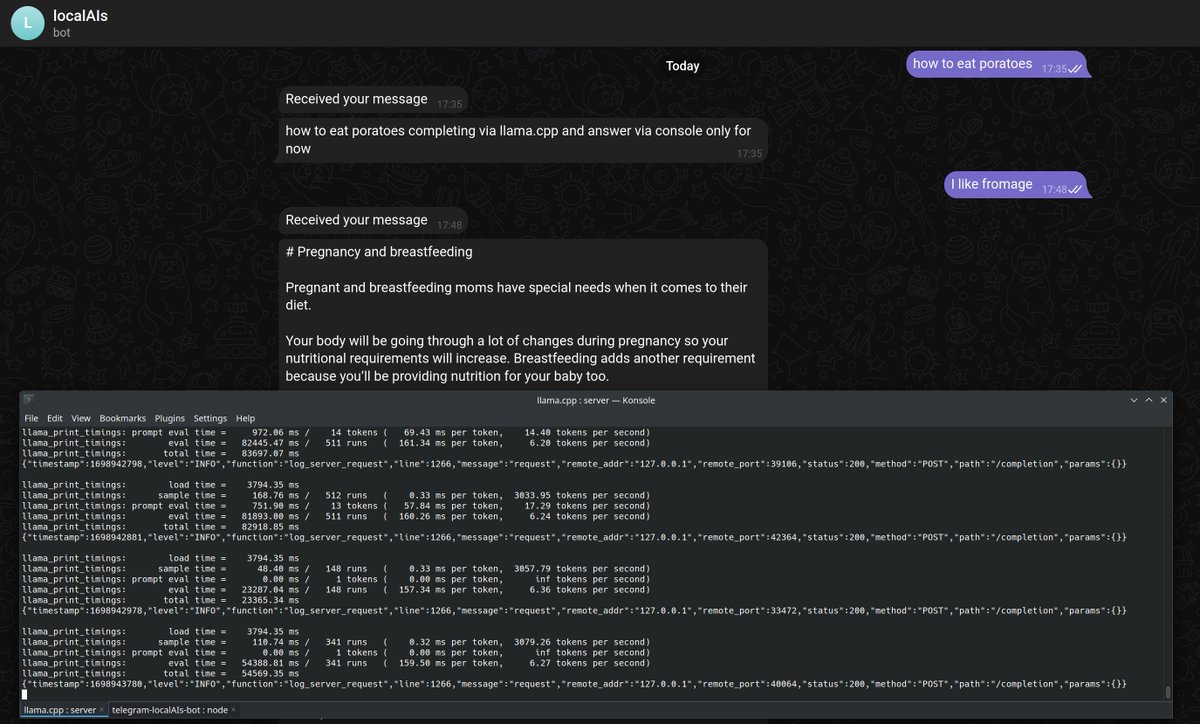

Well… that wasn’t very useful for me but sure😅

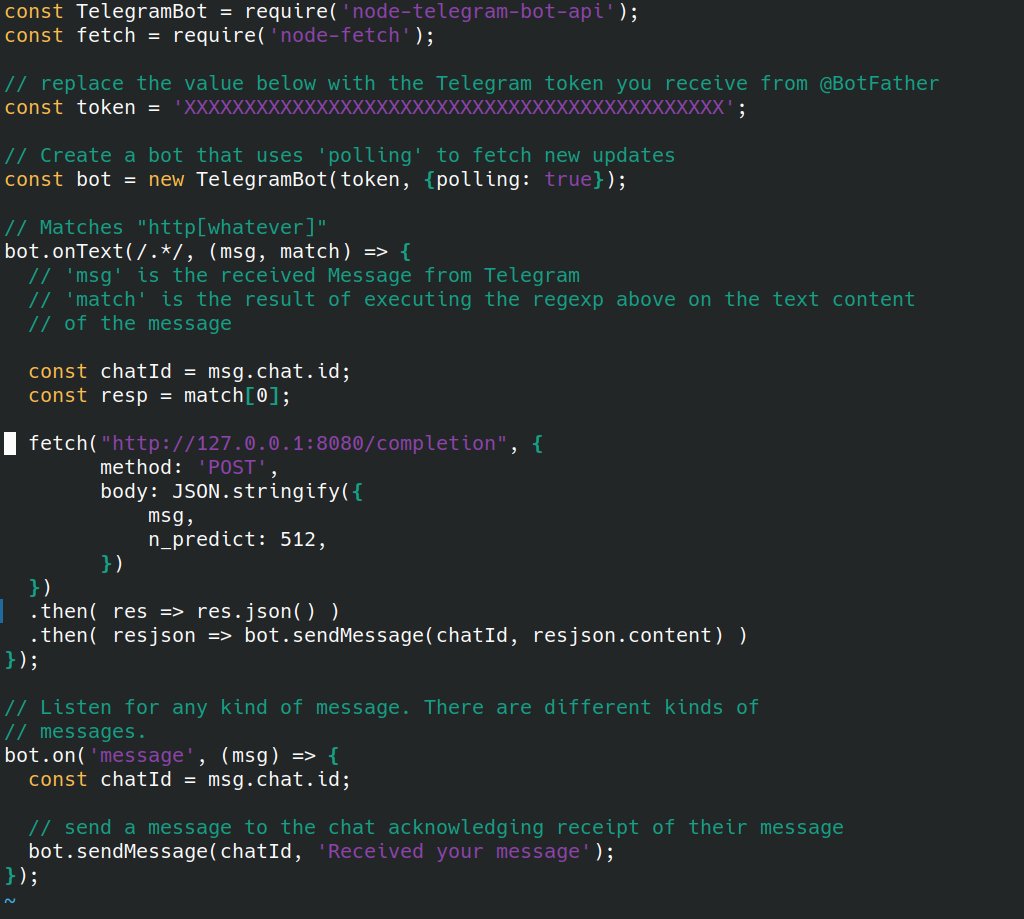

At least it works! Namely, I run an LLM locally (here a @MistralAI model via llama.cpp) and, using its server API, pipe the result to my @telegram bot.

So I can “chat” with my “AIs” from my desktop, phone, any device with a browser.
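For the curious, here is a minimal sketch of what such a pipe could look like. It is not the exact script behind the screenshots. It assumes the llama.cpp server is listening on `127.0.0.1:8080` and exposes its `/completion` endpoint, and that the bot talks to Telegram through the Bot API's `getUpdates` and `sendMessage` methods. The helper names (`post_json`, `ask_llm`, `handle_updates`, `main`) and the `n_predict` value are made up for illustration.

```python
import json
import urllib.request

# Assumption: llama.cpp's server was started locally on its usual port.
LLAMA_URL = "http://127.0.0.1:8080/completion"
TELEGRAM_API = "https://api.telegram.org/bot{token}/{method}"


def post_json(url, payload, timeout=120):
    """POST a JSON body and return the decoded JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def ask_llm(prompt, post=post_json):
    """Send the prompt to the local model and return the generated text."""
    # The /completion endpoint returns the generated text under "content".
    reply = post(LLAMA_URL, {"prompt": prompt, "n_predict": 256})
    return reply["content"].strip()


def handle_updates(token, offset, post=post_json):
    """Answer every pending text message; return the next update offset."""
    url = TELEGRAM_API.format(token=token, method="getUpdates")
    # "timeout" turns this into a long poll, so the loop does not spin.
    updates = post(url, {"offset": offset, "timeout": 30})
    for update in updates.get("result", []):
        # Acknowledge this update so Telegram does not deliver it again.
        offset = update["update_id"] + 1
        message = update.get("message") or {}
        text = message.get("text")
        if not text:
            continue  # skip stickers, photos, edited messages, ...
        answer = ask_llm(text, post=post)
        send_url = TELEGRAM_API.format(token=token, method="sendMessage")
        post(send_url, {"chat_id": message["chat"]["id"], "text": answer})
    return offset


def main(token):
    """Poll Telegram forever, piping each message through the local LLM."""
    offset = 0
    while True:
        offset = handle_updates(token, offset)
```

Calling `main("<your bot token>")` starts the loop. The raw message text is passed straight through as the prompt; an instruct-tuned model would probably answer better with its chat template wrapped around it, which I left out to keep the sketch short.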
